Operating Coordinated Autonomous Vehicles in Complex and Unknown Environments

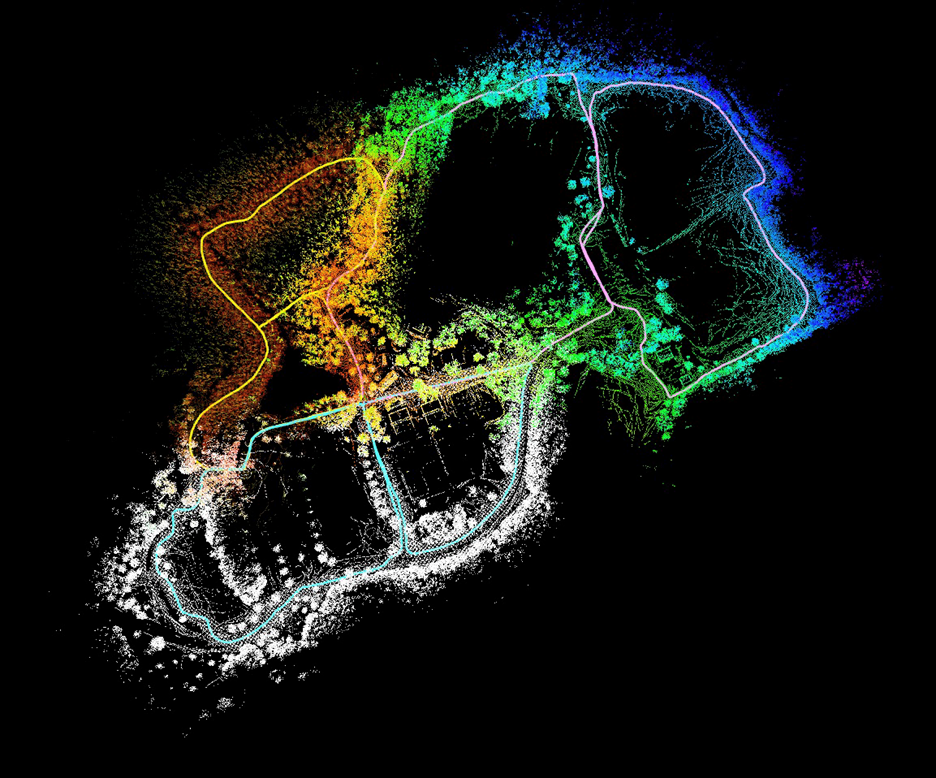

Working with the U.S. Army DEVCOM Armaments Center to develop a coordinated team of intelligent unmanned ground vehicles, Dr. Brendan Englot, Associate Professor at Stevens Institute of Technology, presented work on enabling multiple autonomous vehicles to build LIDAR-based maps while coordinating the exchange of information as they explore unknown, GPS-denied environments. The broader aim of the research is to develop robust autonomy for unmanned underwater, ground, and aerial vehicles in complex physical environments.

The goals of the project are threefold:

- Develop a multi-robot localization and mapping framework that allows a team of unmanned ground vehicles (UGVs) to build more accurate, comprehensive LIDAR-based maps in GPS-denied environments by regularly exchanging information.

- Implement autonomous multi-robot exploration, which allows the UGVs to leverage their cooperative localization and mapping capability to efficiently build a complete map of an unknown, GPS-denied environment within a set of specified boundaries.

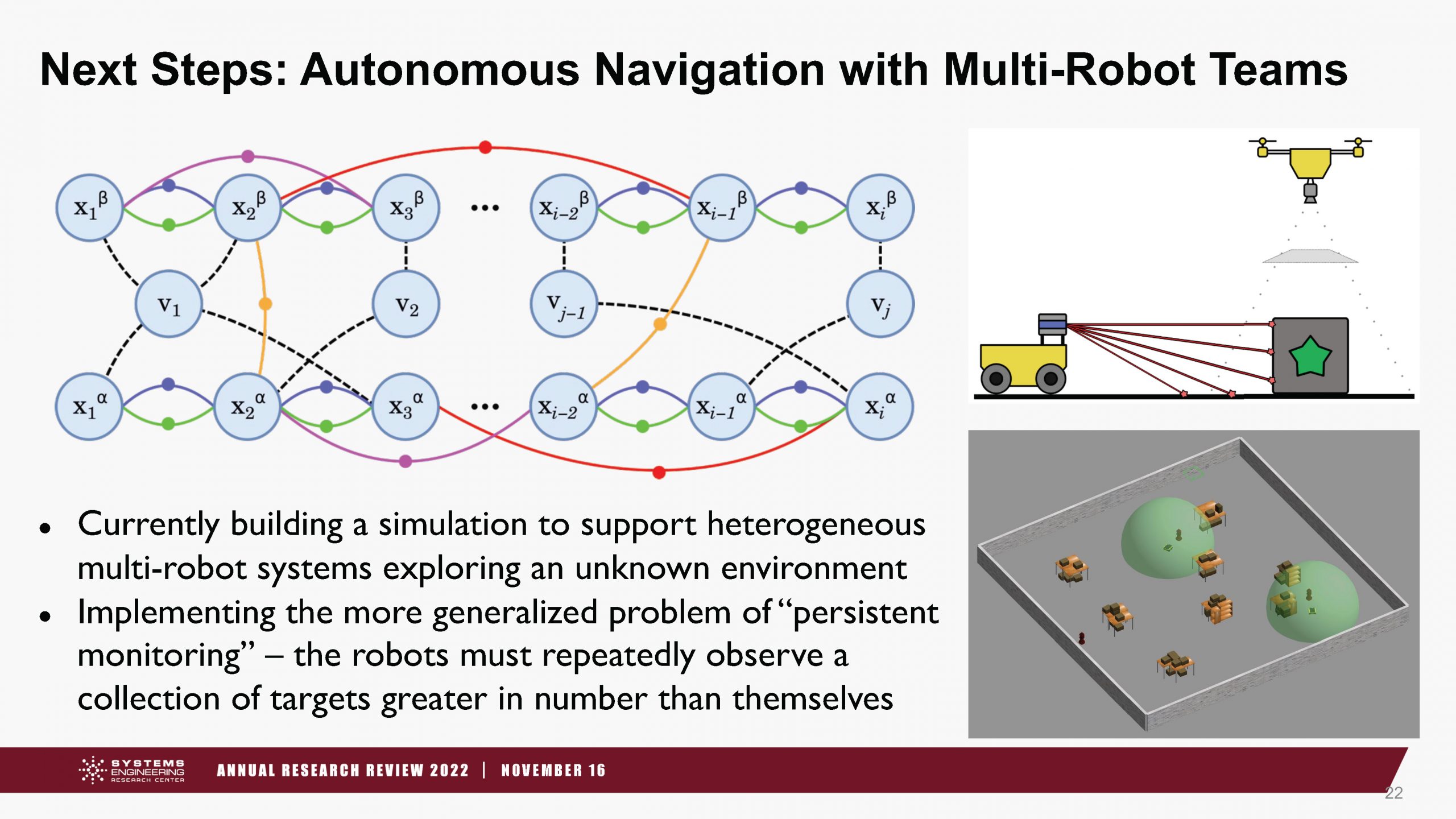

- Integrate machine learning tools into the above capabilities to facilitate both efficient planning and decision-making, using graph networks, and the construction of predictive perceptual data products, such as learning-enhanced grid maps and terrain traversability maps, that will enable high-performance motion planning.
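As a rough illustration of the exploration goal above, one common way to decide where a robot should look next is to find "frontiers": known-free cells of a map that border unexplored space. The sketch below assumes a small 2D occupancy grid and uses hypothetical names (`find_frontiers`, `UNKNOWN`, `FREE`, `OCCUPIED`); it is a generic textbook technique, not necessarily the method used by Dr. Englot's team.

```python
from typing import List, Tuple

# Hypothetical cell labels for a toy occupancy grid (assumed for illustration).
UNKNOWN, FREE, OCCUPIED = -1, 0, 1


def find_frontiers(grid: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of every free cell with at least one unknown 4-neighbor."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue  # only free cells can be frontiers
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                in_bounds = 0 <= nr < rows and 0 <= nc < cols
                if in_bounds and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break  # one unknown neighbor is enough
    return frontiers


# Toy map: the right-hand column is partly unexplored.
example_grid = [
    [FREE, FREE, UNKNOWN],
    [FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE],
]
example_frontiers = find_frontiers(example_grid)
```

In a multi-robot setting, each vehicle could run a check like this on the shared map and then divide the resulting frontier cells among the team; how that assignment is made is outside the scope of this sketch.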

The third goal, integrating machine learning, is meant to overcome the computational complexity and scalability challenges of traditional approaches to this type of capability. To improve performance and speed in real-time applications, the team took two approaches: minimizing the size and frequency of data transmissions between units by using a tool that produces a compact, compressed LIDAR descriptor, and integrating machine learning for decision-making in settings of interest.

Over the course of research, development, and testing, Dr. Englot demonstrated the ability to deploy multiple robotic test vehicles in a distributed manner, sharing localization information and effectively mapping an unknown area. This provided the foundation for introducing intelligent decision-making and led to two key research questions about localization uncertainty and scalability. First, can a neural network be trained to perform belief propagation and make accurate predictions about localization uncertainty? Second, is there a compact abstraction that captures different environments, distilling them down to an essential representation usable across multiple domains, robots, and sensors?

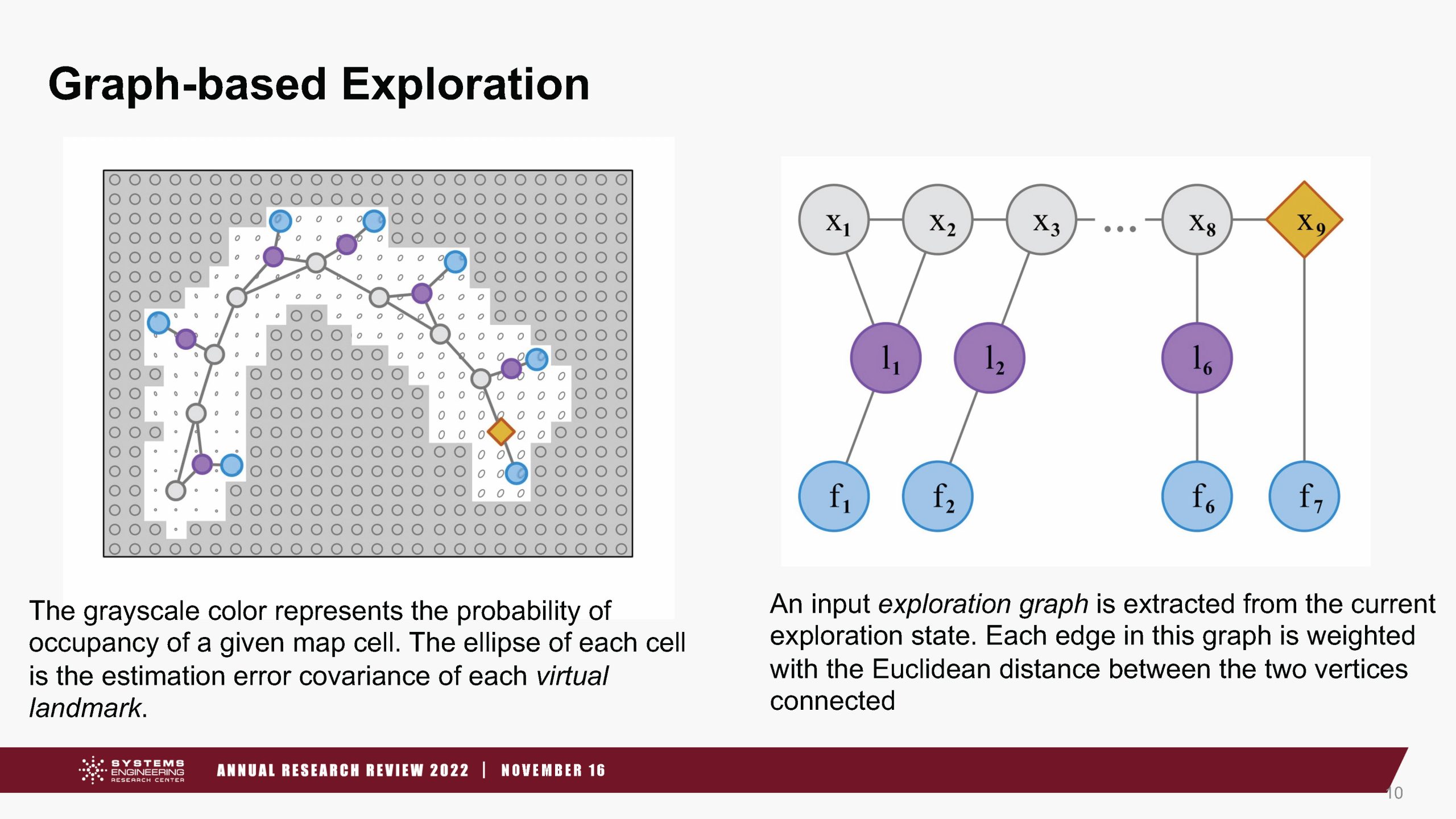

“Our hypothesis for this research is that we could use graph representations, and in turn graph neural networks, to achieve that,” said Dr. Englot. To explore those questions, the team developed an “Exploration Graph,” a single unified representation that captures mobility, sensing capabilities, measurement constraints, ‘pose’ history, prior and current locations, information and landmarks in the environment, frontiers, and waypoints at the boundaries. The team used it to test deep reinforcement learning with graph neural networks.
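To make the idea of a single unified graph more concrete, here is a minimal sketch of how poses, landmarks, and frontiers might be stored together as typed nodes with typed edges. Every name in it (`ExplorationGraph`, `Node`, `NODE_KINDS`, the edge labels, the scalar `uncertainty` field) is an assumption made for illustration; the article does not describe the team's actual data structure or feature encoding.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# Hypothetical node categories, loosely following the elements named in the article.
NODE_KINDS = ("pose", "landmark", "frontier")


@dataclass
class Node:
    node_id: int
    kind: str                  # one of NODE_KINDS
    xy: Tuple[float, float]    # 2D position estimate
    uncertainty: float = 0.0   # assumed scalar summary of localization uncertainty


@dataclass
class ExplorationGraph:
    nodes: Dict[int, Node] = field(default_factory=dict)
    edges: List[Tuple[int, int, str]] = field(default_factory=list)

    def add_node(self, kind: str, xy: Tuple[float, float], uncertainty: float = 0.0) -> int:
        if kind not in NODE_KINDS:
            raise ValueError(f"unknown node kind: {kind}")
        node_id = len(self.nodes)
        self.nodes[node_id] = Node(node_id, kind, xy, uncertainty)
        return node_id

    def add_edge(self, a: int, b: int, kind: str) -> None:
        if a not in self.nodes or b not in self.nodes:
            raise KeyError("both endpoints must already be in the graph")
        self.edges.append((a, b, kind))

    def neighbors(self, node_id: int) -> List[int]:
        """Return ids of nodes sharing an (undirected) edge with node_id."""
        out = []
        for a, b, _ in self.edges:
            if a == node_id:
                out.append(b)
            elif b == node_id:
                out.append(a)
        return out

    def node_features(self) -> List[List[float]]:
        """One-hot node kind plus uncertainty, one row per node in id order."""
        feats = []
        for node_id in sorted(self.nodes):
            n = self.nodes[node_id]
            one_hot = [1.0 if n.kind == k else 0.0 for k in NODE_KINDS]
            feats.append(one_hot + [n.uncertainty])
        return feats


def build_example() -> ExplorationGraph:
    """Two poses, one observed landmark, and one candidate frontier."""
    g = ExplorationGraph()
    p0 = g.add_node("pose", (0.0, 0.0), 0.1)
    p1 = g.add_node("pose", (1.0, 0.0), 0.2)
    lm = g.add_node("landmark", (1.0, 1.0), 0.3)
    fr = g.add_node("frontier", (3.0, 0.0))
    g.add_edge(p0, p1, "odometry")
    g.add_edge(p1, lm, "measurement")
    g.add_edge(p1, fr, "candidate")
    return g
```

A feature matrix like the one returned by `node_features()`, together with the edge list, is the kind of input a graph neural network library typically expects, which is one reason a graph abstraction can carry over across robots and sensors.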

“Our hope is that this can be the vehicle through which we can teach a robot how to perform belief propagation and make good decisions over belief propagation that is compatible with deep reinforcement learning. One of the key things we are looking to achieve as well is scalability,” said Englot.

To learn more about this research, contact Dr. Englot.